Wish List Processing with Azure Service Fabric

Fun with Microservices

Originally Published on the Skyline Technologies Blog

Wish List Processing Today

Santa's Super Software Elves (S3E) are constantly looking to improve Santa's ability to process wish lists as painlessly as possible. It's pretty important to all of us at North Pole Central Operations that deserving kids throughout the world get just what they were hoping for at Christmas every year. During one of our routine architecture review meetings, the team decided it was time to look into replacing our current Wish List Processing Services with something a bit more flexible and future-proof.

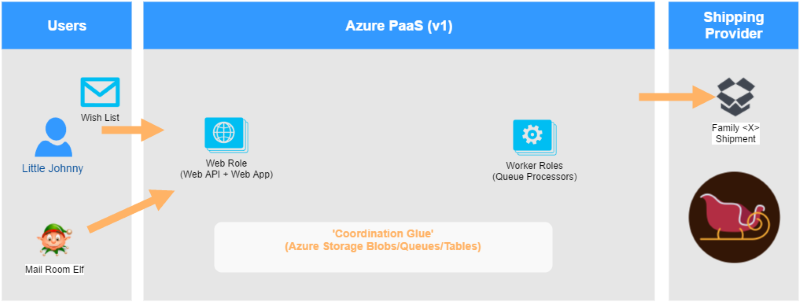

We here on S3E are certainly no slouches when it comes to technology, and we're willing to leverage 'The Cloud' in any way we can to make sure there are presents to open on Christmas Day. We were early adopters of Azure's Platform-as-a-Service Web and Worker roles (PaaS v1), exposing our wish list submission API to those enterprising kids of the world who wished to have direct access to Santa (of course, we also provided a handy web application that the mail elves could use for manual entry). Our current Wish List Processing Service looks a bit like this.

PaaS v1 Layout

As you can see, there is a standard web / worker role layout with some 'glue' in between to hold the persistent data and handle communications between the web and worker roles. In this case, we used the various Azure Storage technologies (Blobs, Queues and Tables) to provide that glue. Person data was stored in Azure Table Storage and Workflows were managed by populating the appropriate Storage Queues. The Worker Role(s) handled shuffling data around and making sure the millions of nice kids around the world had something to open Christmas morning.

The Limitations

While our current solution is working well, the scaling capabilities are pretty coarse-grained. We can play around a bit with the number of instances of the Web and Worker roles, but we don't really have a way of utilizing any of the 'unused' compute power that our Web/Worker role instances may have. We also have to provide pretty much all the 'glue' code ourselves, making sure the two roles work together seamlessly. There's really no built-in facility to manage those occasional 'uh oh' moments (we have them here at the North Pole too). While PaaS v1 does have the concept of 'deployment slots', leveraging them to manage rollback based on service health required a lot of manual scripting.

We also want to be as cost-conscious as possible (we elves need to eat too), so getting more from fewer compute resources seemed like a no-brainer. When utilizing Web/Worker roles, you 'scale' one or the other by essentially spinning up a new virtual machine dedicated to that particular task. Any work-sharing arrangement, similar to our current solution, requires manual coding. Getting more done per machine pretty much directly correlates to writing more code.

Enter Service Fabric

One of the promises of Azure Service Fabric is more efficient use of the resources that a given VM provides. This is done primarily by disconnecting the direct relationship that PaaS v1 had between a role instance and a VM. This does a couple things for us right away. First, it lowers deployment time, as the VM does not need to be re-provisioned as part of the deployment process. Secondly, it allows multiple services (roles in PaaS v1) to run on the same VM instance. By default, you don't have control over where your service deploys to, only the number of instances that are deployed. For those interested, however, there are knobs and switches you can use to, say, have a cluster of high CPU or high memory nodes that certain services are deployed to.

Service Fabric, like PaaS v1, does not require you to manually manage your VM instances / images. How this capability is delivered, however, is quite different than before. Service Fabric is really about leveraging a bunch of existing Azure technologies, rather than providing a 'standalone' solution as in PaaS v1. Service Fabric uses technologies like Azure Load Balancer, Azure Virtual Machine Scale Sets and Azure Virtual Networks to provide the operating environment for your services. When you configure a Service Fabric Cluster, whether via the Azure Portal or Azure Resource Manager Templates, you are asking the Azure infrastructure to set up a suite of existing technologies and glue them together into a cluster for you. You can then manage that cluster using the Azure Portal, Azure Command Line Tools or Azure PowerShell Cmdlets.

Service Fabric Layout

In the diagram above, you can see some of the Azure technologies that are used to create a cluster. At the core, a Virtual Machine Scale Set (VMSS) is used to provide the nodes for the cluster. These nodes are linked together with an Azure Virtual Network, and secured with an SSL certificate that is pulled from Azure Key Vault. With a little additional work, you can secure the management of your cluster, including application deployments, with Azure Active Directory.

The fabric is exposed to the public internet in a couple of ways. First, an Azure Load Balancer sits in front of the fabric and routinely probes the nodes to determine which services (ports) are available on each node in the system (this includes any custom application ports you may need). Secondly, Service Fabric Explorer gives you a window into your cluster to monitor the application(s) running there, and the general state of the fabric nodes. There are toggles that can be used to simulate node failure, loss of state data, etc., so you can safely test failure scenarios.

Service Fabric - Service Types

Before we get into the nuts and bolts of the new proposed solution, it will be good to get some vocabulary out of the way. While Service Fabric is really a general purpose 'cluster as a service', the Service Fabric SDK has a number of specific concepts that we'll be leveraging to make everything work.

Services

A Service Fabric 'Service' is a piece of code that runs within the cluster. Each service has one or more addresses (or endpoints) that can be called to invoke the service functions. All services expose their functionality by declaring and implementing an interface and utilizing Service Listeners to receive requests from other nodes in the fabric (or from the internet through the load balancer). Services can be either stateful or stateless. Stateless services generally run with a copy on each node of your cluster. They may be truly stateless (they just perform operations on the data they receive), or their state may be managed by an external resource (e.g. a database). Stateful (or Reliable) services, on the other hand, have state that is managed by the Service Fabric runtime (this state is potentially further divided by partition).

Actors

A Service Fabric 'Actor' is a named instance of an object that lives in Service Fabric. Actors relate on a certain level to a 'Stateful Service', in that the actor state is managed by Service Fabric. However, Actors differ in that they are further managed in a turn-based (queued) manner. For example, if multiple requests are made to the same Actor type (e.g. ElfActor) with the same Actor ID (e.g. Joe), then that Actor will process the requests one at a time, in the order they are received. Actors also have the ability to fire best-effort events to anyone in the cluster that is listening.
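For the curious, here is a minimal sketch of the turn-based idea in plain Python. It is not the Service Fabric Actor API: the `TurnBasedActor` class, its `handle` method and the request names are all made up for illustration, and an `asyncio.Lock` stands in for the turn management that the runtime would provide.

```python
import asyncio


class TurnBasedActor:
    """Toy actor (hypothetical): requests are handled one at a time."""

    def __init__(self, actor_id):
        self.actor_id = actor_id
        self.handled = []              # stands in for runtime-managed actor state
        self._turn = asyncio.Lock()    # only one request may hold the turn

    async def handle(self, request):
        async with self._turn:
            await asyncio.sleep(0.01)  # simulated work; other callers wait here
            self.handled.append(request)


async def demo():
    joe = TurnBasedActor("Joe")
    # Three callers hit the same actor ID concurrently.
    await asyncio.gather(*(joe.handle(f"list-{i}") for i in range(3)))
    return joe.handled


result = asyncio.run(demo())
print(result)
```

Even though all three calls are started together, each one waits for the previous turn to finish, so the actor never sees two requests at the same moment.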

Wish List Processing - Revisited

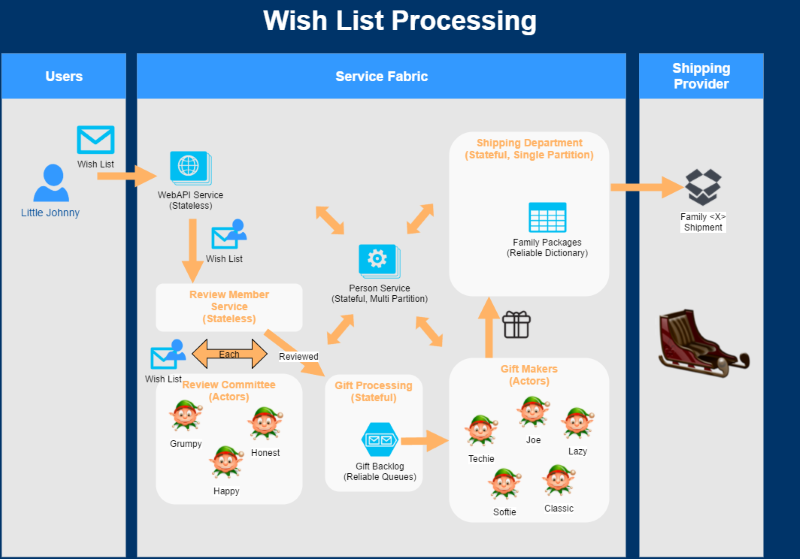

Finally, let's take a look at the proposed architecture for our new 'v2' Wish List Processing service. The elves in S3E have come up with the following high-level diagram of the services that will live in the cluster.

Wish List Layout

As with our original solution, the primary 'user interface' is a Web API endpoint that runs in the cluster and is exposed to the public internet via the Azure Load Balancer. Future versions may bring over an updated version of the web application portion of the v1 Web Role as a separate service within the cluster. Since these are web apps/APIs, they are hosted within a stateless service. When the service receives a new wish list, it looks up the associated person from the 'Person' service.

The Person Service is a stateful service that holds all known person data in an IReliableDictionary. This structure keeps the dictionary contents synchronized across all instances of the service. Furthermore, this particular stateful service is partitioned, which allows us to divide (or shard) the data into buckets so that no single set of service instances is responsible for all the service data.
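To make the sharding idea concrete, here is a rough sketch in plain Python. It assumes a simple hash-and-modulo scheme; the partition count, the `partition_for` helper and the `PersonStore` class are hypothetical, and ordinary in-memory dictionaries stand in for the replicated IReliableDictionary.

```python
import hashlib

PARTITION_COUNT = 4  # hypothetical; the real partition count is not stated here


def partition_for(person_id, partitions=PARTITION_COUNT):
    """Map a person key to a stable bucket index."""
    digest = hashlib.sha256(person_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


class PersonStore:
    """One plain dict per partition (no replication in this sketch)."""

    def __init__(self, partitions=PARTITION_COUNT):
        self._buckets = [{} for _ in range(partitions)]

    def _bucket(self, person_id):
        return self._buckets[partition_for(person_id, len(self._buckets))]

    def put(self, person_id, record):
        self._bucket(person_id)[person_id] = record

    def get(self, person_id):
        return self._bucket(person_id).get(person_id)

    def bucket_sizes(self):
        return [len(bucket) for bucket in self._buckets]


store = PersonStore()
for name in ("Timmy", "Sally", "Joe"):
    store.put(name, {"nice": True})
print(store.get("Sally"), store.bucket_sizes())
```

Because the same key always hashes to the same bucket, a caller only needs to talk to the one partition that owns a given person.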

Once the API has looked up and assigned the person to the incoming wish list, the list is sent to the 'Review Member Service', which is another stateless service. In this case, the set of reviewers is defined in a configuration value that the service reads on startup. The service takes the incoming wish list data and routes it to the 'Review Committee' actors, who review the list and approve items. Once the Actor has reviewed the list, it is re-submitted to the Review Member Service. Once all reviewers have voted on the list, the list is sent to the 'Gift Processing Service' so the approved wish list items can be fulfilled.
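The routing loop above can be sketched in plain Python. This is an illustration under several assumptions, not our production code: the reviewer names are invented, reviewers are visited one after another, the votes travel with the wish list (so the routing function itself holds no state), and an item counts as approved only if every reviewer approved it.

```python
from dataclasses import dataclass, field

# Hypothetical reviewer names; the real set comes from a configuration value.
REVIEWERS = ("Bernard", "Judy", "Curtis")


@dataclass
class WishList:
    person: str
    items: list
    votes: dict = field(default_factory=dict)  # reviewer -> set of approved items


def next_stop(wish_list, reviewers=REVIEWERS):
    """Decide where the list goes next, using only data carried on the list."""
    for reviewer in reviewers:
        if reviewer not in wish_list.votes:
            return ("review", reviewer)
    return ("gift-processing", None)


def approved_items(wish_list, reviewers=REVIEWERS):
    """Assumption: an item is approved only if every reviewer approved it."""
    return [item for item in wish_list.items
            if all(item in wish_list.votes[r] for r in reviewers)]


wish_list = WishList("Timmy", ["train", "pony"])
while True:
    stop, reviewer = next_stop(wish_list)
    if stop != "review":
        break
    # Stand-in for the Review Committee actor: everyone approves the train only.
    wish_list.votes[reviewer] = {"train"}
print(stop, approved_items(wish_list))
```

Keeping the votes on the list itself is one way to let the routing service stay stateless; other designs are certainly possible.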

The Gift Processing Service is a stateful service that holds a series of IReliableQueue instances, one for each type of gift. In addition to the normal interface-based set of operations, the Gift Processing Service has a persistent background process that reads items from these queues and delivers them to the appropriate Gift Maker Actor. Each Gift Maker elf can only make one gift at a time, and is only trained to make a single gift type, so Actors provide a good representation. Once the Gift Makers finish their work, they forward the wrapped gift to the 'Shipping Department', which is a single-partition stateful service that keeps an IReliableDictionary of families and their gifts, so the gifts can be bundled together and sent to the shipping provider, Santa's Sleigh Unlimited (or Amazon Prime Drone Delivery for the technically inclined).
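Here is a small plain-Python sketch of the queue-per-gift-type arrangement. The class and method names (`GiftMaker`, `GiftProcessor`, `drain_once`) are hypothetical, in-memory deques stand in for IReliableQueue, and a single call to `drain_once` stands in for one pass of the background process.

```python
from collections import defaultdict, deque


class GiftMaker:
    """Stand-in for a Gift Maker Actor trained on exactly one gift type."""

    def __init__(self, gift_type):
        self.gift_type = gift_type
        self.made_for = []

    def make(self, order):
        if order["gift"] != self.gift_type:
            raise ValueError(f"not trained to make {order['gift']}")
        self.made_for.append(order["child"])


class GiftProcessor:
    def __init__(self, makers):
        self.queues = defaultdict(deque)             # one queue per gift type
        self.makers = {m.gift_type: m for m in makers}

    def enqueue(self, order):
        self.queues[order["gift"]].append(order)

    def drain_once(self):
        """One background pass: deliver at most one order per gift type."""
        delivered = 0
        for gift_type, queue in self.queues.items():
            maker = self.makers.get(gift_type)
            if maker is not None and queue:
                maker.make(queue.popleft())
                delivered += 1
        return delivered


trains, dolls = GiftMaker("train"), GiftMaker("doll")
processor = GiftProcessor([trains, dolls])
processor.enqueue({"child": "Timmy", "gift": "train"})
processor.enqueue({"child": "Sally", "gift": "train"})
processor.enqueue({"child": "Joe", "gift": "doll"})
first_pass = processor.drain_once()
second_pass = processor.drain_once()
print(first_pass, second_pass, trains.made_for, dolls.made_for)
```

Handing each maker at most one order per pass mirrors the "one gift at a time" rule, while separate queues keep a backlog of trains from holding up the dolls.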

Future Enhancements

We here at S3E rarely sleep, so our wish list of features is extensive. Some future enhancements that we're currently investigating include:

Mail Elf Utility - Migration of the existing web application was not part of the scope of our original plan. Instead, we've updated the existing web application to point to the new Service Fabric backed services. In the future, we'd like to bring in the web app as a second 'application' in Service Fabric, primarily to maintain isolation and separate deployment packages. Service Fabric allows any number of applications to run within the fabric, so there's no problem there, and moving the app will allow us to decommission our existing web/worker roles completely.

Service Fabric Health Monitoring - We plan to use this to monitor the gift maker queue depth, to know when we should start training new Gift Makers to cope with demand. We also plan to leverage the automated application rollback capabilities that use health monitoring to determine whether or not a new version of your application is 'healthy'. If the upgrade causes these health checks to fail, the upgrade is automatically cancelled and rolled back to a previous working state. This will allow us to extend our continuous deployment model that we use in the test / staging environments all the way to the production system.

Santa's Naughty & Nice List - We plan to create a Web Application to manage the data in the Person Service such that Santa (and his fleet of Elves on the Shelf) can dynamically update the actual behavior rating of people registered with the service, compared with the self-reporting that we currently accept with the wish list submission process. Again, the plan here is to create a separately deployed application within the same cluster.

Reviewer Status Monitoring - We hope to start leveraging Actor Events to help better track how long reviewers are spending reviewing wish lists, and how long gift makers are taking to make particular gifts. We plan to link these data streams to Azure Stream Analytics so we can be alerted when reviews are taking too long (reviewing lists can be somewhat of a burnout job, even for elves), or which gifts seem to be the hardest to make (to increase gift maker training).

... and the list goes on, but Actor Timers / Reminders, Logging with EventSource and .NET Core are all on our research backlog. There is also a lot of opportunity to leverage other Azure services, like Azure Service Bus and Azure IoT Hub to automate even more of our operations.
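The health-gated rollback mentioned under Service Fabric Health Monitoring can be pictured with a short plain-Python sketch. It is a conceptual model only, not how Service Fabric is configured or invoked: the `upgrade_with_rollback` function, the domain names and the `is_healthy` callback are all hypothetical.

```python
def upgrade_with_rollback(domains, new_version, is_healthy):
    """Upgrade one domain at a time; restore every domain if a check fails.

    domains:    dict mapping a domain name to its currently deployed version.
    is_healthy: hypothetical callback (domain, version) -> bool.
    Returns True if the upgrade completed, False if it was rolled back.
    """
    previous = dict(domains)          # snapshot of the last working state
    for name in list(domains):
        domains[name] = new_version
        if not is_healthy(name, new_version):
            domains.update(previous)  # put every domain back
            return False
    return True


cluster = {"UD0": "1.0", "UD1": "1.0", "UD2": "1.0"}
# Pretend the new version fails its health check on the second domain.
completed = upgrade_with_rollback(cluster, "2.0", lambda d, v: d != "UD1")
print(completed, cluster)
```

The point of the sketch is the shape of the loop: each step is checked before the next one starts, and a failed check returns the whole cluster to the snapshot.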

Wrapping it up

At S3E and NPCO, we are always focused on continuous improvement. Moving wish list processing to Azure Service Fabric will address many of the scaling and coordination concerns that we have with our existing Web / Worker Role solution. The move will also open the door to further improvements with less code. Using the Service Fabric SDK and Visual Studio 2015 (and soon 2017) allows us to very quickly wire together our new microservices without needing to worry too much about the details of making them all play nice together. In the end, it's all about getting toys out to those millions of nice girls and boys (and a few parents too). Doing that more efficiently and with fewer operational headaches allows us elves a bit of needed respite, and maybe the occasional vacation to Maui too ;) (even elves have big dreams).